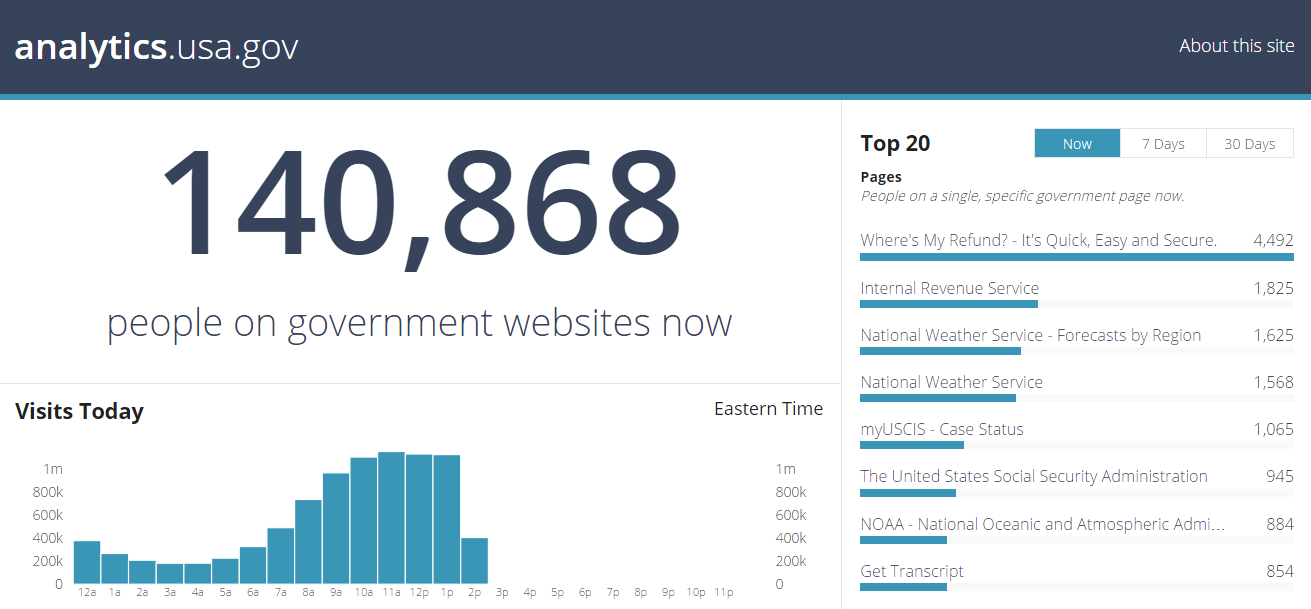

The U.S. federal government now has a public dashboard and dataset for its web traffic, at analytics.usa.gov.

This data comes from a unified Google Analytics profile managed by the Digital Analytics Program, which (like 18F) is a team inside the General Services Administration.

18F worked with the Digital Analytics Program, the U.S. Digital Service, and the White House to build and host the dashboard and its public dataset.

You can read more on the White House blog about the project, and some insights from the data.

In this post, we'll explain how the dashboard works, the engineering choices we made, and the open source work we produced along the way.

A few important notes:

- The dashboard doesn't yet show traffic to all federal government websites, because not every government website participates in the program. Details on coverage are published below the dashboard.

- analytics.usa.gov was made in a very short period of time (2–3 weeks). There are clearly many more cool things that can be done with this data, and we certainly hope you'll give us feedback on what to do next.

- As the dashboard shows, the federal government has a very large Google Analytics account. Individual visitors are not tracked across websites, and visitor IP addresses are anonymized before they are ever stored in Google Analytics. The Digital Analytics Program has a privacy FAQ.

A nice-looking dashboard

The analytics.usa.gov dashboard is a static website, stored in Amazon S3 and served via Amazon CloudFront. The dashboard loads empty, uses JavaScript to download JSON data, and renders it client-side into tables and charts.

Our server caches the real-time data from Google every minute, and the dashboard re-downloads that cached copy every 15 seconds. The rest of the data is cached daily, and is only downloaded on page load.
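The 15-second refresh can be sketched as a small polling helper. This is a minimal illustration, not the dashboard's actual code: `startPolling`, `load`, and `render` are hypothetical names, and the URL in the usage comment is a placeholder.

```javascript
// Hypothetical helper: run `load` right away, then again on a fixed interval,
// and pass each result to `render`. Both functions are supplied by the caller,
// so the sketch is not tied to D3 or to any real data URL.
function startPolling(load, render, intervalMs) {
  const tick = () =>
    load()
      .then(render)
      .catch((err) => console.error("refresh failed:", err));

  tick(); // populate immediately on page load
  const id = setInterval(tick, intervalMs);

  // Return a function that stops the polling.
  return () => clearInterval(id);
}

// Possible usage in a browser (placeholder URL and render function):
//   startPolling(
//     () => fetch("data/realtime.json").then((res) => res.json()),
//     renderRealtime,
//     15 * 1000
//   );
```

A failed refresh is only logged here, so whatever was rendered last stays on screen until the next successful download.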

So the big number of people online:

...is made with this HTML:


And then we use a whole bunch of D3 to download and render the data.

Each section of the dashboard requires downloading a separate piece of data to populate it. This does mean the dashboard may take some time to load fully over slow connections, but it keeps our code very simple and the relationship between data and display very clear.
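One way to picture the one-file-per-section approach is the sketch below. The section list, selectors, and file names are assumptions made for illustration, and `fetchJson` and `render` are injected stand-ins for whatever download and drawing code a page uses.

```javascript
// Hypothetical mapping from a page element to the JSON file that fills it.
const SECTIONS = [
  { selector: "#realtime", url: "data/realtime.json" },
  { selector: "#top-pages", url: "data/top-pages.json" },
];

// Start every download at once and render each section as its data arrives.
// A failure in one section is recorded but does not stop the others.
function loadSections(sections, fetchJson, render) {
  return Promise.all(
    sections.map((section) =>
      fetchJson(section.url)
        .then((data) => {
          render(section.selector, data);
          return { section, ok: true };
        })
        .catch((error) => ({ section, ok: false, error }))
    )
  );
}
```

Because each section owns exactly one file, it stays easy to see which data drives which part of the page, at the cost of several requests on load.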

An analytics reporting system

To manage the data reporting process, we made an open source tool called analytics-reporter.

It's a lightweight command line tool, written in Node, that downloads reports from Google Analytics, and transforms the report data into more friendly, provider-agnostic JSON.
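To give a feel for what "more friendly" JSON can mean, here is a rough sketch that flattens a Google Analytics style response (a `columnHeaders` array plus positional `rows`) into plain objects. The function name and the output shape are assumptions for illustration, not analytics-reporter's actual code or format.

```javascript
// Sketch: turn positional rows into objects keyed by column name.
// The { name, data } output shape is hypothetical.
function simplifyReport(name, response) {
  // Strip the provider prefix: "ga:pagePath" -> "pagePath", "rt:activeUsers" -> "activeUsers".
  const keys = response.columnHeaders.map((header) =>
    header.name.replace(/^(ga|rt):/, "")
  );

  // `rows` may be missing when a query has no results, so fall back to an empty list.
  const data = (response.rows || []).map((row) => {
    const entry = {};
    row.forEach((value, i) => {
      entry[keys[i]] = value;
    });
    return entry;
  });

  return { name, data };
}

// Example input with made-up values:
const sample = {
  columnHeaders: [{ name: "ga:deviceCategory" }, { name: "ga:sessions" }],
  rows: [["desktop", "100"], ["mobile", "40"]],
};
console.log(JSON.stringify(simplifyReport("devices", sample)));
```

Dropping the `ga:` and `rt:` prefixes is what makes the result provider-agnostic in this sketch: a consumer sees `sessions`, not a Google-specific identifier.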

You can install it from npm:

After you follow the setup instructions to authorize the tool with Google, it can produce JSON output for any report defined in reports.json.

A report description looks like this:


And if you ask the included analytics command to run that report by name:

Then it will print out something like this:


The tool comes with a built-in --publish option, so that if you define some Amazon S3 details, it can publish the data to S3 directly.

Running this command:

...runs the report and uploads the data directly to:

Real-time data is downloaded from the Google Analytics Real Time Reporting API, and daily data is downloaded from the Google Analytics Core Reporting API.

We have a single Ubuntu server running in Amazon EC2 that uses a crontab to run commands like this at appropriate intervals to keep our data fresh.

There's some pretty clear room for improvement here: the tool doesn't do dynamic queries, reports are hardcoded into version control, and the repo includes an 18F-specific crontab. But it's very simple to use, and a command line interface with environment variables for configuration gives it the flexibility to be deployed in a wide variety of environments.

Everything is static files

Our all-static approach has some clear limitations: there's a delay to the live data, and we can't answer dynamic queries. We provide a fixed set of data, and we only provide a snapshot in time that we constantly overwrite.

We went this route because it lets us handle potentially heavy traffic to live data without having to scale a dynamic application server. It also means that we can stay easily within Google Analytics' daily API request limits, because our API requests are only a function of time, not traffic.
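The "function of time, not traffic" point can be checked with some quick arithmetic. The report counts and intervals below are hypothetical, chosen only to show the calculation; the real schedule lives in the project's crontab.

```javascript
// Count API requests per day for a fixed schedule.
// Each entry says how many reports run and how often (in seconds).
function requestsPerDay(schedule) {
  const SECONDS_PER_DAY = 24 * 60 * 60;
  return schedule.reduce(
    (total, job) =>
      total + job.reports * Math.floor(SECONDS_PER_DAY / job.everySeconds),
    0
  );
}

// Hypothetical schedule: 2 real-time reports every minute, 10 reports once a day.
const schedule = [
  { reports: 2, everySeconds: 60 },
  { reports: 10, everySeconds: 24 * 60 * 60 },
];

console.log(requestsPerDay(schedule)); // 2 * 1440 + 10 * 1 = 2890
```

The number of visitors never appears in the calculation, so a traffic spike on the dashboard leaves the request count unchanged.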

All static files are stored in Amazon S3 and served by Amazon CloudFront, so we can lean on CloudFront to absorb all unpredictable load. The server that runs our cron jobs is not affected by website visitors, and has no appreciable load.

From a maintenance standpoint, this is a dream. And we can always replace this later with a dynamic server if it becomes necessary, by which time we'll have a clearer understanding of what kind of traffic the site can expect and what features people want.

Usability testing

We went to a local civic hacking meetup and conducted a quick usability testing workshop. In line with Paperwork Reduction Act (PRA) guidelines, we interviewed 9 members of the public and a handful of federal government employees. Any government project can do this, and the feedback was very helpful.

We asked our testers to find specific information we wanted to convey and solicited general feedback. Some examples of changes we made based on their feedback include:

- We changed the description of the big number at the top of the page from "people online right now" to "people on government websites now."

- We added more descriptions to help explain the difference between top 20 "pages" vs "domains." For the top 20 charts for the past week and month, we show the number of visits to an entire government domain, which includes traffic to all sub-pages within a government domain (for example, irs.gov and usajobs.gov). However, for the top 20 most popular sites right now, the team wanted to show the most popular single page within the government domains (for example, the IRS's Where's My Refund? page or USCIS's Case Status Online page).

- We indented and adjusted the look of the breakdown of IE and Windows data to simplify and clarify the section.

- We changed the numbers in the Top 20 section from "505k" and "5m" to actual, specific numbers (for example, 505,485 and 5,301,691). Testers often assumed any three-digit number was actually short for a six-digit number.
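The difference between the two number styles in the last change can be sketched as follows. Both function names are made up for this example, and the abbreviating formatter is only a guess at how labels like "505k" might be produced.

```javascript
// Exact style: full number with thousands separators.
function formatExact(n) {
  return n.toLocaleString("en-US");
}

// Abbreviated style (hypothetical): round to thousands or millions.
function formatAbbreviated(n) {
  if (n >= 1e6) return Math.round(n / 1e6) + "m";
  if (n >= 1e3) return Math.round(n / 1e3) + "k";
  return String(n);
}

console.log(formatAbbreviated(505485), formatExact(505485));   // 505k 505,485
console.log(formatAbbreviated(5301691), formatExact(5301691)); // 5m 5,301,691
```

With exact formatting, a genuine three-digit count such as 505 can no longer be mistaken for a shortened 505,000.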

We certainly haven't resolved all the usability issues, so please share your feedback.

Living up to our principles

Open source: We're an open source team, and built the dashboard in the open from day 1.

- github.com/GSA/analytics.usa.gov - The dashboard itself.

- github.com/18F/analytics-reporter - The data reporting system.

All of our work is released under a CC0 public domain dedication.

Open data: All the data we use for the dashboard is available for direct download below the dashboard. Right now, it's just live snapshots, and there's no formal documentation.

Your ideas and bug reports will be very helpful in figuring out what to do next.

Secure connections: 18F uses HTTPS for everything we do, including analytics dashboards.

In the meantime, we hope this dashboard is useful for citizens and for the federal government, and we hope to see you on GitHub.